Lab Members

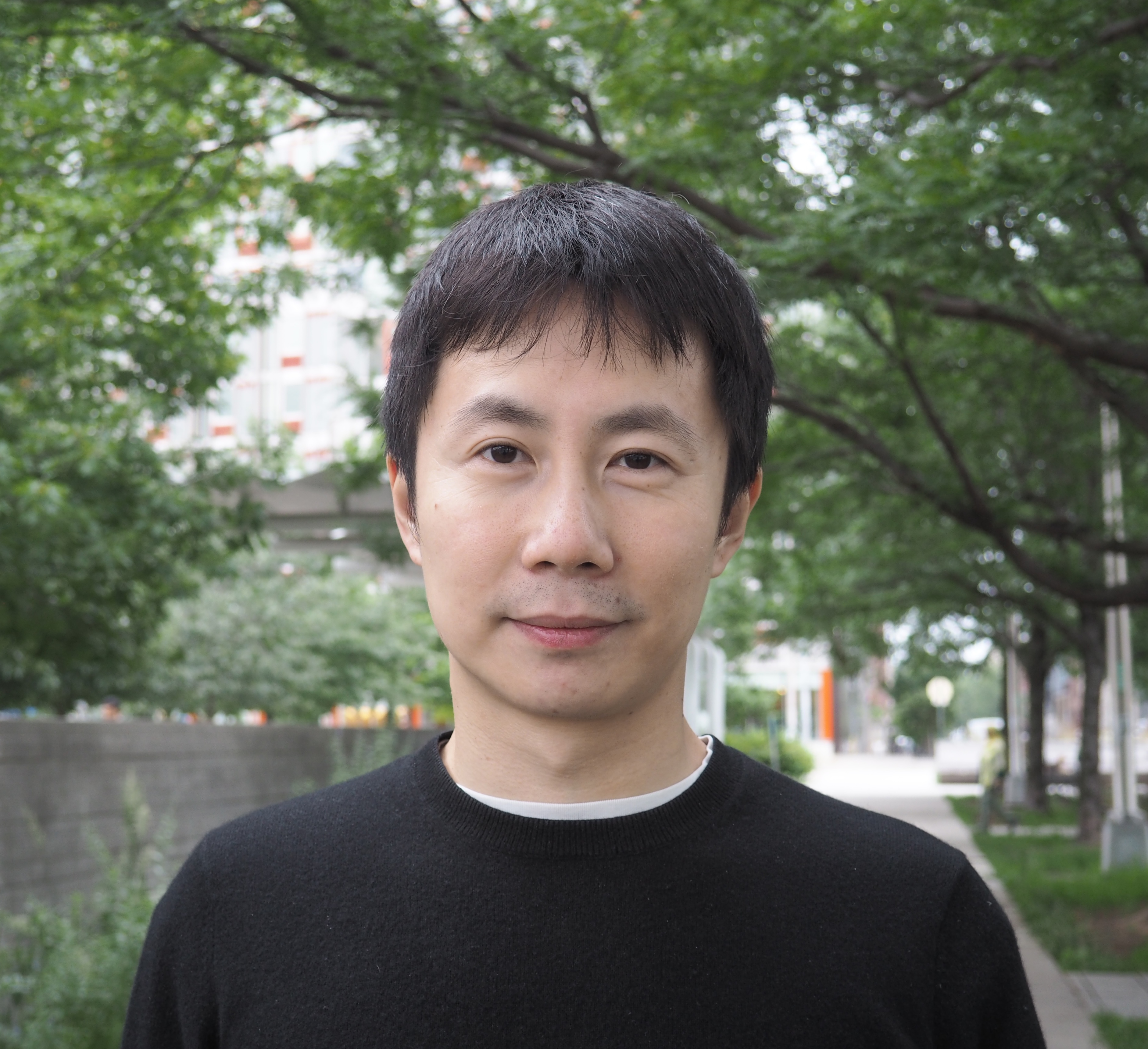

Yue Liu, Ph.D.

Principal Investigator

We study how neural circuit dynamics support flexible, generalizable behavior.

There is an ancient saying from China: “The wise delight in water; the kind delight in mountains” (智者乐水,仁者乐山). Indeed, the essence of intelligence lies in its fluidity—it allows us not only to master specific skills, but also to adapt and apply what we know across a wide variety of situations.

Imagine the first time you learned how to add two numbers. The teacher showed you a few simple examples and a set of rules. Once you grasped the rules, you could apply them to any numbers, solving infinitely many new problems. How can we do so much from learning so little? In other words, how do brains generalize from limited experience?

Our lab seeks to understand the neural circuit basis of fluid intelligence—our ability to use learned knowledge to solve new problems.

We approach this question through computational modeling and theory. We build neural network models that are informed by neurobiology and cognitive theory, and that can generalize to solve new tasks. By analyzing these models, we aim to uncover the mechanisms that support flexible reasoning and abstraction. We work closely with experimental collaborators to test and refine the hypotheses generated by our models.

This is a deeply interdisciplinary problem—one that has intrigued psychologists, linguists (how can we generate infinite sentences from a finite vocabulary?), neuroscientists, and AI researchers (how can artificial systems generalize out of distribution?). We believe that understanding the neural mechanisms of fluid intelligence will require insights from all of these disciplines. Such understanding could ultimately inspire the next generation of AI systems and inform new approaches to treating psychiatric and cognitive disorders.

How can a neural circuit learn abstract rules from a small set of examples and apply them broadly?

Mechanisms for decision-making and memory across timescales.

Using insights from neuroscience to build more flexible AI systems.

Looking for postdocs and students interested in computational/theoretical neuroscience of cognition. Please contact me at yueliu@fau.edu if you are interested.


See Google Scholar for a complete list of publications.

Florida Atlantic University – Department of Biomedical Engineering, Stiles-Nicholson Brain Institute

Email: yueliu@fau.edu